Published on December 18, 2009 | Last Updated on September 13, 2025

After introducing the basic differences between BIM Capability and BIM Maturity in Episode 11, and briefly discussing the many available and relevant maturity models in Episode 12, this post introduces a new specialized tool to measure BIM performance: the BIM Maturity Index (BIMMI).

This episode is available in other languages. For a list of all translated episodes, please refer to https://bimexcellence.org/thinkspace/translations/. The original English version continues below:

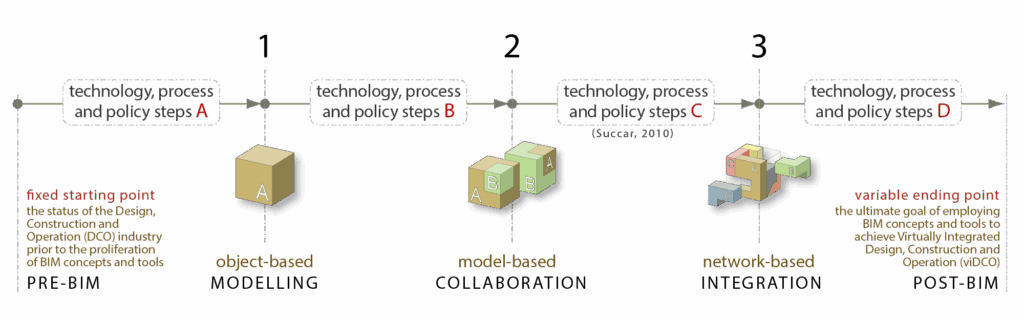

As an additional reminder, BIM Capability is the basic ability to perform a task or deliver a BIM service/product. BIM Capability Stages (or BIM Stages) define the minimum BIM requirements – the major milestones that need to be reached by a team or an organization as it implements BIM technologies and concepts (refer to Episode 8 or Figure 1 below). Having a ‘measuring tape’ to establish BIM capability is important because it is a quick yet accurate assessment of an organization’s ability to deliver BIM services. For example, using Capability as a metric, we can safely establish that an organization at Stage 3 is able to deliver more BIM services to a client or project-partner than an organization at Stage 1 or 2.

However, since BIM Capability Stages are established when minimum requirements are met, they cannot assess abilities (or the lack thereof) beyond these minimum requirements. As a case in point, when using the Capability metric, two organizations using Tekla to primarily generate model-based steel details are said to be at BIM Stage 1. This is a useful bit of information because it sets these two organizations apart from all others still using CAD, but it tells us very little about their delivery speed, data richness or modelling quality. In fact, the two organizations may well be many experience-years apart without that being detected by the Capability scale. That’s why another metric (Maturity) is needed to assess and report on significant variations within service delivery and their underlying causes.

The term ‘BIM Maturity’ refers to the quality, repeatability and degrees of excellence of BIM services. In other words, BIM Maturity is the more advanced ability to excel in performing a task or delivering a BIM service/product. Without measuring these qualities, there is no way of differentiating ‘real’ abilities to deliver BIM services from blatant BIM wash.

To address this issue, the BIM Maturity Index[1] (BIMMI) has been developed by investigating and then integrating several maturity models from different industries[2]. BIMMI is similar to many of the Capability Maturity Models (CMM) discussed in Episode 12 but reflects the specifics of BIM technologies, processes and policies.

BIMMI has five distinct Maturity Levels: (a) Initial/Ad-hoc, (b) Defined, (c) Managed, (d) Integrated and (e) Optimized. In general, the progression from lower to higher levels of BIM Maturity indicates (i) better control through minimizing variations between targets and actual results, (ii) better predictability and forecasting by lowering variability in competency, performance and costs and (iii) greater effectiveness in reaching defined goals and setting new more ambitious ones[3, 4]. Figure 2 below visually summarizes the five Maturity Levels or “evolutionary plateaux”[5] followed by a brief description of each level:

- Maturity Level a (Initial or Ad-hoc): BIM implementation is characterized by the absence of an overall strategy and a significant shortage of defined processes and policies. BIM software tools are deployed in a non-systematic fashion and without adequate prior investigations and preparations. BIM adoption is partially achieved through the ‘heroic’ efforts of individual champions – a process that lacks the active and consistent support of middle and senior management. Collaboration capabilities (if achieved) are typically incompatible with those of project partners and occur with little or no pre-defined process guides, standards or interchange protocols. There is no formal resolution of stakeholders’ roles and responsibilities.

- Maturity Level b (Defined): BIM implementation is driven by senior managers’ overall vision. Most processes and policies are well documented, process innovations are recognized and business opportunities arising from BIM are identified but not yet exploited. BIM heroism starts to fade in importance as competency increases; staff productivity is still unpredictable. Basic BIM guidelines are available including training manuals, workflow guides and BIM delivery standards. Training requirements are well-defined and training is typically provided only when needed. Collaboration with project partners shows signs of mutual trust/respect among project participants and follows predefined process guides, standards and interchange protocols. Responsibilities are distributed and risks are mitigated through contractual means.

- Maturity Level c (Managed): The vision to implement BIM is communicated and understood by most staff. BIM implementation strategy is coupled with detailed action plans and a monitoring regime. BIM is acknowledged as a series of technology, process and policy changes which need to be managed without hampering innovation. Business opportunities arising from BIM are acknowledged and used in marketing efforts. BIM roles are institutionalized and performance targets are achieved more consistently. Product/service specifications similar to AIA’s Model Progression Specifications[6] or BIPS’ information levels[7] are adopted. Modelling, 2D representation, quantification, specifications and analytical properties of 3D models are managed through detailed standards and quality plans. Collaboration responsibilities, risks and rewards are clear within temporary project alliances or longer-term partnerships.

- Maturity Level d (Integrated): BIM implementation, its requirements and process/product innovation are integrated into organizational, strategic, managerial and communicative channels. Business opportunities arising from BIM are part of the team’s, organization’s or project-team’s competitive advantage and are used to attract and keep clients. Software selection and deployment follow strategic objectives, not just operational requirements. Modelling deliverables are well synchronized across projects and tightly integrated with business processes. Knowledge is integrated into organizational systems; stored knowledge is made accessible and easily retrievable[8]. BIM roles and competency targets are embedded within the organization. Productivity is now consistent and predictable. BIM standards and performance benchmarks are incorporated into quality management and performance improvement systems. Collaboration includes downstream players and is characterized by the involvement of key participants during projects’ early lifecycle phases.

- Maturity Level e (Optimized): Organizational and project stakeholders have internalized the BIM vision and are actively achieving it[9]. BIM implementation strategy and its effects on organizational models are continuously revisited and realigned with other strategies. If alterations to processes or policies are needed, they are proactively implemented. Innovative product/process solutions and business opportunities are sought after and followed through relentlessly. Selection/use of software tools is continuously revisited to enhance productivity and align with strategic objectives. Modelling deliverables are cyclically revised/optimized to benefit from new software functionalities and available extensions. Optimization of integrated data, process and communication channels is relentless. Collaborative responsibilities, risks and rewards are continuously revisited and realigned. Contractual models are modified to achieve best practices and highest value for all stakeholders. Benchmarks are repeatedly revisited to ensure the highest possible quality in processes, products and services.
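For readers who like to see an ordinal scale written down, the five levels above can be sketched as an ordered enumeration. This is only an illustrative sketch: the class name `MaturityLevel`, the helper `next_level` and the numeric values 1 to 5 are assumptions made for this example and are not part of BIMMI itself; only the level names and the letters a to e come from the post.

```python
from enum import IntEnum
from typing import Optional


class MaturityLevel(IntEnum):
    """The five BIMMI Maturity Levels, ordered from lowest (a) to highest (e).

    The integer values are an assumption used only to make levels comparable.
    """
    INITIAL = 1      # level a: Initial / Ad-hoc
    DEFINED = 2      # level b
    MANAGED = 3      # level c
    INTEGRATED = 4   # level d
    OPTIMIZED = 5    # level e

    @property
    def letter(self) -> str:
        # Map the values 1..5 onto the letters a..e used in the post.
        return "abcde"[self.value - 1]

    @classmethod
    def from_letter(cls, letter: str) -> "MaturityLevel":
        # Accept a single letter a..e (case-insensitive); reject anything else.
        letter = letter.strip().lower()
        if len(letter) != 1 or letter not in "abcde":
            raise ValueError(f"unknown maturity level letter: {letter!r}")
        return cls("abcde".index(letter) + 1)


def next_level(level: MaturityLevel) -> Optional[MaturityLevel]:
    """Return the next plateau to aim for, or None if already Optimized."""
    if level is MaturityLevel.OPTIMIZED:
        return None
    return MaturityLevel(level.value + 1)


if __name__ == "__main__":
    current = MaturityLevel.from_letter("b")
    print(current.name, "->", next_level(current).name)
```

Because the levels are ordered, a comparison such as `MaturityLevel.MANAGED > MaturityLevel.DEFINED` holds, which mirrors the idea of progressing from lower to higher plateaux.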

In a future post, I’ll shed more light on the detailed BIM Competencies[10] that Capability and Maturity tools actually measure. For now, I’ll provide a sample BIM Performance Assessment summary generated using both metrics. Please note that – although the assessment below is based on my consultancy work – it has been significantly altered so that the ‘assessed’ organization cannot be identified. I’ve also removed most Performance Achievements (the useless positives), focused on Performance Challenges (the beneficial negatives) and added some explanatory notes [enclosed in brackets].

Sample Performance Assessment – Executive Summary

“…upon concluding a preliminary assessment of [organization name], the overall organizational BIM Performance has been tentatively established at 1a [Capability Stage 1, Maturity Level a] pending the provision of [specific artefacts]…

The [organization name] has been established at Capability Stage 1 […because it] has actively employed [BIM software tool name] to generate [X number of projects] over the past [Y months/years] at a [utilization rate of Z%]…[other metrics]…none of these projects were collaborative with the exception of [pilot project name]…

The [organization name] has been established at Maturity Level a based on [a specific Maturity scoring system]….BIM Performance Achievements have been detailed in [document name] while BIM Performance Challenges have been detailed in [document name]…below is a summary of these Performance Challenges [grouped under the three main types of BIM Competencies]:

- Technology: Usage of software applications is unmonitored and unregulated [different software tools are used although they generate very similar deliverables]. Software licence numbers are misaligned to staff requirements. 3D Models are mostly relied upon only to generate accurate 2D drawings [the data richness within the model is not being exploited]. Data usage and storage are not well defined. Hardware specifications are generally adequate but are non-uniform. Some computers fall well below what confirmed staff skills and expected BIM deliverables require [equipment replacement and upgrades are mostly treated as cost items – postponed whenever possible and committed-to only when unavoidable]. With respect to Networks, currently adopted solutions are not well integrated into the workflow [individuals and teams use whatever tools are at hand to communicate and share files]. While there is an Intranet with a dedicated BIM section, the content is mostly static and not well suited to harvest, store and share knowledge [very few staff have administrative rights (or motivation) to upload information to the intranet].

- Process: Senior leaders/managers have varied visions about BIM, and its implementation is conducted without a consistent overall strategy [as typical at this maturity level, BIM is treated as a technology stream with minimal consideration for its process and policy implications]. Change resistance is evident among staff [and possibly widespread amongst middle management]. The workplace environment is not recognized as a factor in increasing staff satisfaction/motivation [found to be not conducive to productivity – think of noise, glare and ergonomics]. While knowledge is recognized as an organizational asset, it is mainly shared between staff in an informal fashion [through oral tips, techniques and lessons learned]. Business opportunities arising from BIM are not well acknowledged. BIM objects [components, parts or families] are not consistently available in adequate numbers or quality. 3D model deliverables [as BIM products] suffer from too high, too low or inconsistent levels of detail. At the time of this assessment, it appears that more importance is given to the [visual] quality of 2D representations than is given to 3D model accuracy [also, products and services offered by the organization represent a fraction of the capabilities inherent within the software tools employed]. There are no [overall] modelling quality checks or formal audit procedures. BIM Projects are conducted using undocumented and thus inconsistent practices [there are no project initiation or closure protocols]. Staff competency levels are unmonitored by [and thus unknown to] management, BIM roles need clarification [roles are currently ambiguous] and team structures pre-date BIM. Staff training is not well structured and workflows are not well understood [in one instance, staff were not systematically inducted into BIM processes; in another, they were confused about workflows and ‘who to go to’ for technical and procedural assistance]. Performance is unpredictable [management cannot predict BIM project duration or HR costs] and productivity appears to still depend on champions’ efforts within teams. A mentality of ‘shortcuts’ [working around the system] has been detected. Performance may be inconsistent as it is neither monitored nor reported in any systematic fashion [as typical at this Maturity Level, the organization had islands of concentrated BIM productivity separated by seas of BIM idleness/confusion].

- Policy: The organization does not yet document its detailed BIM standards or workflows. There are no institutionalized quality controls for 3D models or 2D representations. The BIM training policies are not documented [current training protocols are out-dated] and auxiliary educational mediums are not provided to staff [training DVDs and the like]. Contractually, there is no BIM-specific risk identification or mitigation policy.

The above assessment summary may not provide a glossy image of an aspiring BIM-enabled organization. However, such a list of challenges – pointed and revealing as it is – will help the organization’s management to identify where it needs to invest time and energy to enhance its BIM performance.
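The combined score used in the sample (“1a”, meaning Capability Stage 1 and Maturity Level a) can be read as a pair of independent measurements. The snippet below is a minimal sketch of how such a score might be parsed and spelled out. The names `PerformanceScore` and `parse_score`, and the strict “one digit followed by one letter” format, are assumptions made for illustration; the post only shows the single example “1a” and does not define a formal notation.

```python
import re
from dataclasses import dataclass

# Level names as listed in the post; the lookup table itself is illustrative.
MATURITY_LEVELS = {
    "a": "Initial/Ad-hoc",
    "b": "Defined",
    "c": "Managed",
    "d": "Integrated",
    "e": "Optimized",
}


@dataclass(frozen=True)
class PerformanceScore:
    """A combined BIM performance score such as '1a' (hypothetical container)."""
    capability_stage: int   # 1, 2 or 3
    maturity_level: str     # 'a' .. 'e'

    def describe(self) -> str:
        name = MATURITY_LEVELS[self.maturity_level]
        return (f"Capability Stage {self.capability_stage}, "
                f"Maturity Level {self.maturity_level} ({name})")


def parse_score(text: str) -> PerformanceScore:
    """Parse a score like '1a'; assumes Stages 1-3 and Levels a-e."""
    match = re.fullmatch(r"([1-3])([a-e])", text.strip().lower())
    if match is None:
        raise ValueError(f"not a recognised performance score: {text!r}")
    return PerformanceScore(int(match.group(1)), match.group(2))


if __name__ == "__main__":
    print(parse_score("1a").describe())
```

Keeping the two parts as separate fields reflects the point made earlier: two organizations can share a Capability Stage yet sit at very different Maturity Levels.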

In summary, understanding Capability, Maturity and how to use both metrics to assess BIM Competencies can assist AECO stakeholders to determine their overall BIM performance levels. Once performance assessments are made, performance improvements will soon follow.

Updated Oct 23, 2015: A video explaining the Point of Adoption model is now available on the BIM Framework’s YouTube channel.

Updated May 10, 2016: The model is now published as “Succar, B. and Kassem, M. (2016), Building Information Modelling: Point of Adoption, CIB World Congress, Tampere Finland, May 30 – June 3, 2016” – download: https://bit.ly/BIMPaperA9

References:

1. Note that I opted to use the term BIM Maturity Index rather than Model to avoid confusion.

2. Succar, B. (2009) Building Information Modelling Maturity Matrix. IN Underwood, J. & Isikdag, U. (Eds.) Handbook of Research on Building Information Modelling and Construction Informatics: Concepts and Technologies, Information Science Reference, IGI Publishing.

3. Lockamy III, A., & McCormack, K. (2004). The development of a supply chain management process maturity model using the concepts of business process orientation. Supply Chain Management: An International Journal, 9(4); pages 272-278.

4. McCormack, K., Ladeira, M. B., & Oliveira, M. P. V. d. (2008). Supply chain maturity and performance in Brazil. Supply Chain Management: An International Journal, 13(4); pages 272-282.

5. SEI. (2008). People Capability Maturity Model – Version 2, Software Engineering Institute / Carnegie Mellon. Retrieved October 11, 2008, from https://www.sei.cmu.edu/cmm-p/version2/index.html.

6. Refer to 2008 AIA California Council, Model Progression Specifications (https://bit.ly/AIAMPS 70KB PDF document).

7. Refer to 2008 Danish Government’s BIPS, Digital Construction 3D Working Method (https://bit.ly/BIPS3D 2.2MB PDF).

8. Refer to the 4 levels in knowledge retention in Arif, M. et al. (2009), Measuring knowledge retention: a case study of a construction consultancy in the UAE. Engineering, Construction and Architectural Management, 16(1); pages 92-108.

9. Nightingale, D.J. and J.H. Mize (2002), Development of a Lean Enterprise Transformation Maturity Model. Information Knowledge Systems Management, 3(1): p. 15.

10. A definition of BIM Competencies has been provided in Episode 12 (endnote 2). You can also use the blog’s custom search engine to find it.

Cite as: BIMe Initiative (2025), 'Episode 13: the BIM Maturity Index', https://bimexcellence.org/thinkspace/episode-13-the-bim-maturity-index/. First published 18 December 2009. Viewed 3 March 2026

Where is the “content” aspect of the maturity index?

The BIM Maturity Index, as a standardized measurement tool, is intentionally designed to be separate from the ‘content’ it assesses. The most important reasons behind this separation are the sheer amount of content the index will be applied to (what is expected to be available, achieved or delivered at each Capability Stage and Maturity Level) and the need to simplify the assessment process.

BIMMI (unlike other Capability Maturity Models) is designed to uniformly apply to the full spectrum of BIM Technologies, Processes and Policies covering the full lifecycle of projects/facilities (Design, Construction and Operations phases). Industry players like architects, engineers, contractors and facility managers need to complete many steps and fulfil certain requirements before they can deliver BIM products and services. Other steps are needed to increase the quality of these products and services. Some of these requirements/deliverables are common across markets, disciplines and organizational sizes while others are quite unique to a specific market, discipline or size.

So, infusing the index with the ‘content’ related to all of the above would have made it very cumbersome and rigid, and would certainly have reduced its scalability. That’s why the ‘content’ is collated separately outside the index under what I call BIM Competency Sets. These will be discussed in future posts.

Cool article!

We are currently working on a BIM Quickscan to test at what maturity level a company is working (and on which issues it can improve).

We came to (almost) exactly the same results!

Would be nice to discuss some of these subjects with you!

Kind regards,

Leon van Berlo

Thanks Leon,

I have checked http://bit.ly/QuickScan and found the information provided quite interesting. I definitely would like to discuss BIM assessment/ improvement with you and the TNO team. I’ll send you an email shortly so we can organize a voice call or a web-meeting.

Dear Bilal,

A very informative episode. Thank you for sharing.

Dear Leon,

It would be nice if you can send a link to a paper/article that forms the background for this TNO model.

Happy new year to you both 🙂

Best Regards,

Umit Isikdag

Bilal,

Have a look at http://www.bimquickscan.nl as well (use the google translate feature).

I’m looking forward to hearing from you.

Regards,

Léon

Umit,

Have a look at http://www.bimquickscan.nl as well (use the google translate feature).

I’m looking forward to hearing from you.

Regards,

Léon

Leon,

Thank you for that.

BIMQuickScan looks very promising. ThinkSpace readers are advised to check it out (http://bit.ly/QuickScanEng)

I’ve added the translated site to BIMSearch.net although it’s not being picked up properly by Google’s custom search engine. I’ll try to investigate that later on.

P.S. I look forward to meeting you at the 2010 CIB Congress so we can exchange notes.

Hi Bilal,

I have with great interest read your blog post Episode 13 about BIM Maturity Index.

I am involved in a Danish development project where we are developing a new version of the bips information level specification (part of your reference 7) and would like to know if you have come across other similar initiatives in your research or practical work. I am familiar with the U.S. Model Progression Specification method but would like to know if there are other similar initiatives or practical experience with MPS etc. elsewhere in the world.

Any ideas, references, links, case studies, scientific papers etc. would be very helpful.

Regards,

Kristian

Hi Kristian,

Thank you for your comment. I’m not aware of any other initiative or academic work similar to AIA’s Model Progression Specifications or BIPS’ information levels. I personally haven’t used BIPS’ classification in my consultancy work but did earlier try to use LODs (E202 – 2008) on a large collaborative BIM project. I faced a few issues with how LODs are structured which prompted me to develop a modified information classification system. I’ll be discussing it in the next BIM Episode; please stay tuned.

Bilal

Salam Alaikum Bilal.

I think I was one of the first to reference your BIM maturity stages in my literary research work. Now I find more and more references to your work on BIM implementation and achieving maturity. Recently I read a journal paper published in Science Direct which repeatedly refers to the terms you coined in the BIM world, especially Object based modelling, Modelling based integration and Network based collaboration.

You have already created a niche in the world of BIM. Keep working hard…

Link to the paper is below.

http://www.sciencedirect.com/science/article/pii/S0926580512000234

Regards

Imran

Wa Alaikum Assalam Imran…Thank you for your kind words and the reference provided. The three BIM capability stages you’ve mentioned are based on minimum, quantifiable requirements and are thus easy to understand and use. Although I’m happy some of my research has been referenced in peer-reviewed journals, I actually get more satisfaction when I learn of organizations adopting BIM Capability Stages and – more importantly – BIM Maturity Levels as a basis for their implementation efforts. I know of a few but I’m hoping my next research-based project (http://www.BIMexcellence.net) will convince more organizations to adopt these stages/levels to measure and improve their BIM performance. Thanks again.

Hello Mr. Bilal,

I am Mervyn and I am a Filipino (from the Philippines) taking up my Master’s in Bangkok. My research is about BIM maturity assessment for the development of an implementation guideline in the Philippines. I read about your maturity and I am quite interested in using your model because you have expressed a very detailed and flexible tool. I wish to thank you for providing such tools and concepts about BIM. Keep it up! 🙂

informative.

http://uonlibrary.uonbi.ac.ke/